Scientific racism? Chinese professor defends facial-recognition study after Google researchers scoff

A Chinese professor’s study on identifying criminals from their facial features has been lambasted by Google researchers, who described it as “deeply problematic, both ethically and scientifically.”

Shanghai Jiao Tong University computer science Professor Wu Xiaolin said the Google scientists had read into the research something that simply isn’t there and then resorted to “name-calling,” the South China Morning Post reported.

“Their charge of scientific racism was groundless,” Wu added, saying that his work was taken out of context and that he was just eager to share his findings with the public.

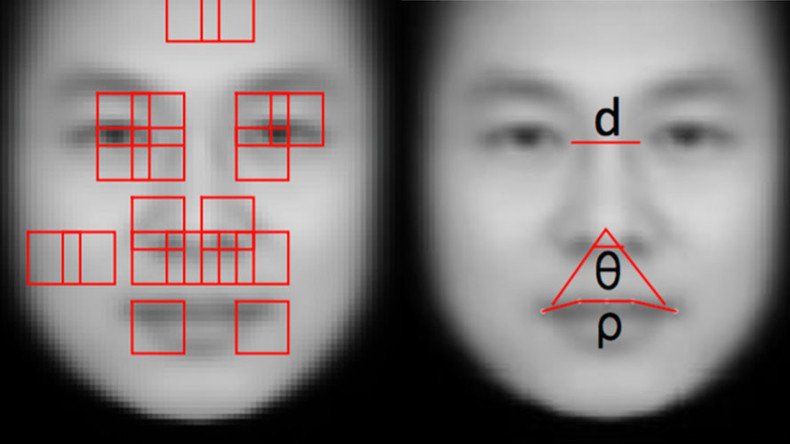

In the research, Wu and his student Zhang Xi described machines that could determine whether someone was a criminal, basing their analysis on nearly 2,000 Chinese state ID photos of criminals and non-criminals.

In particular, the Chinese researchers said they controlled for “race, gender and age,” and noted that “the faces of law-abiding members of the public have a greater degree of resemblance compared with the faces of criminals.” They added that criminals tended to have eyes set closer together: “the distance between two eye inner corners for criminals is slightly narrower (5.6%) than for non-criminals.”

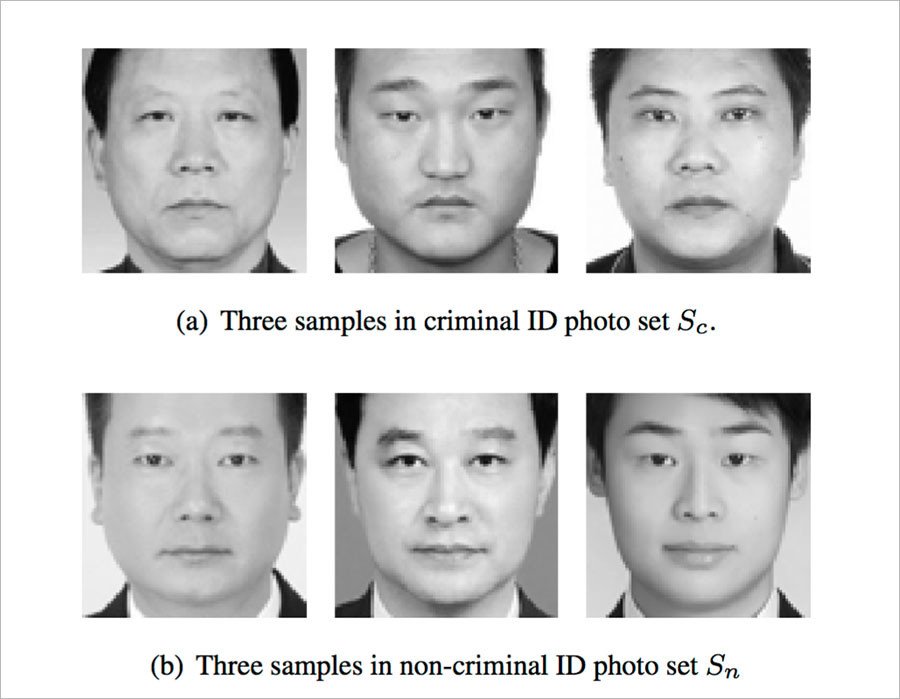

All of the photos show people aged between 18 and 55 with no facial hair, facial scars or other obvious markings. Seven hundred and thirty of the images are labeled ‘criminals’, while the rest are labeled ‘non-criminals’.

The study was submitted to Cornell University Library’s arXiv resource, a repository for scientific papers, last November.

The Google researchers’ harsh response, however, came only last week, when they described the Chinese scientists’ findings as “deeply problematic, both ethically and scientifically.” They likened Wu’s study to physiognomy, the practice of judging a person’s character from their facial features, which modern scholars regard as a pseudoscience.

“In one sense, it’s nothing new. However, the use of modern machine learning (which is both powerful and, to many, mysterious) can lend these old claims new credibility,” Google researchers Blaise Aguera y Arcas and Margaret Mitchell, together with Princeton University psychology professor Alexander Todorov, wrote in a critique published online.

The Google scientists highlighted six of the study’s example images of ‘criminals’ and ‘non-criminals’, noting that the ‘non-criminals’ appeared to be smiling while the supposed ‘criminals’ were frowning.

According to the Google researchers, what the Chinese scientists’ findings actually show is the “inaccuracy and systematic unfairness of many human judgments, including official ones made in a criminal justice context.”

The Google researchers also questioned the study’s implausibly high accuracy rate (about 90 percent), citing “a well-controlled” 2015 study by computer vision researchers Gil Levi and Tal Hassner, which used a convolutional neural network with the same architecture as the one used by the Chinese scientists (AlexNet). That network was able to guess the gender of a face with an accuracy of only 86.8 percent, which makes a 90 percent rate for detecting criminality hard to credit.