Google will 'know you better than your intimate partner'

In 15 years’ time, computers will surpass their creators in intelligence, with the ability to tell stories and crack jokes, predicts a leading expert in artificial intelligence, who says Google will “know the answer to your question before you ask it.”

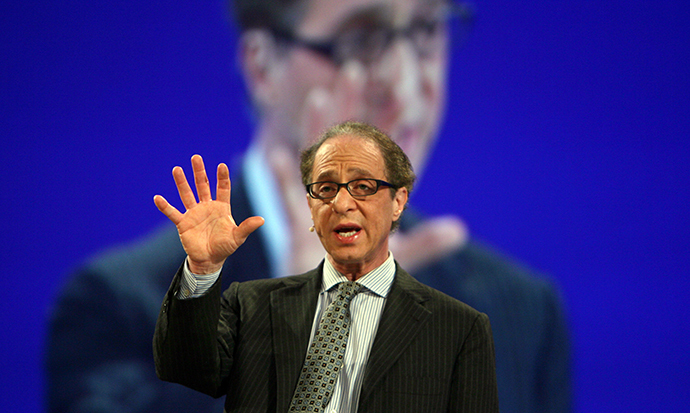

Most people would probably agree that computers are man-made technologies that function strictly within boundaries set by their human makers. For technologists like Google engineering director Ray Kurzweil, however, the moment when computers liberate themselves from their masters will occur within our lifetime.

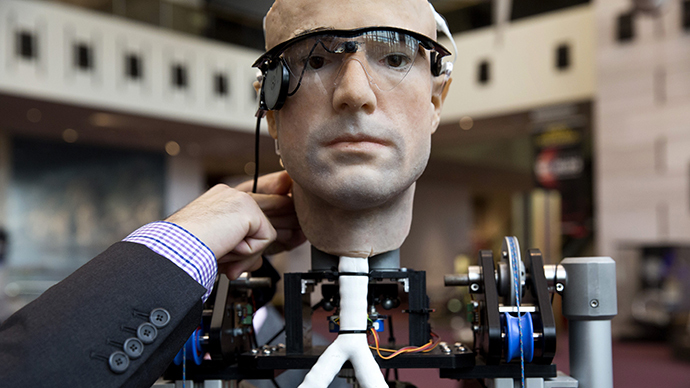

By the year 2029, computers and robots will not only have surpassed their makers in terms of raw intelligence, they will understand us better than we understand ourselves, the futurist predicts with enthusiasm.

Kurzweil, 66, is the closest thing to a pop star in the world of artificial intelligence, the place where self-proclaimed geeks quietly lay the groundwork for what could truly be described as a new world order.

The internet visionary is avidly working towards an unseemly marriage of sorts between machine and man, a phenomenon he has popularized as “the singularity.” The movement, which also goes by the name ‘transhumanism,’ is anxiously awaiting that Matrix moment when artificial intelligence and the human brain merge to form a superhuman that will never again have to Google a nagging question.

In this robot-dominated world, humans, starring in a cheapened knockoff of the biblical Creation story, will have downloaded themselves into their own technology, becoming veritable gods unto themselves.

In the meantime, computers will continue to humble, and even humiliate, their human creators, much as IBM’s Deep Blue did in 1997 when it defeated world chess champion Garry Kasparov.

The next step for Kurzweil is to devise programs that allow computers to understand what humans are saying, which is, after all, what most of the internet consists of.

"My project is ultimately to base search on really understanding what the language means," he told the Guardian in an interview. "When you write an article, you're not creating an interesting collection of words. You have something to say and Google is devoted to intelligently organizing and processing the world's information.

"The message in your article is information, and the computers are not picking up on that. So we would want them to read everything on the web and every page of every book, then be able to engage in intelligent dialogue with the user to be able to answer their questions."

However, these sensational advances in artificial intelligence, which are proceeding with little public debate, come hot on the heels of the Snowden leaks, which revealed a high level of collusion between the National Security Agency (NSA) and major IT companies in accessing our personal communications.

Now, Google, the global vacuum sweeper of information, is showing a marked interest in powerful techniques and technologies that will ultimately make spying on its subscribers a bit redundant, since computers will already know every detail of our personal lives – even our deepest thoughts.

The dawn of the Google ‘thought police’?

Kurzweil summarized his Google job description in one succinct line: "I have a one-sentence spec. Which is to help bring natural language understanding to Google. And how they do that is up to me," he told The Observer.

Understanding human semantics, he says, is the key to computers understanding everything.

But here is where the new advances in artificial intelligence become not only murky, but potentially sinister: “Google will know the answer to your question before you have asked it, he says. It will have read every email you've ever written, every document, every idle thought you've ever tapped into a search-engine box. It will know you better than your intimate partner does. Better, perhaps, than even yourself.”

"Computers are on the threshold of reading and understanding the semantic content of a language, but not quite at human levels. But since they can read a million times more material than humans they can make up for that with quantity. ... Which is to say to have the computer read tens of billions of pages. Watson [an IBM artificial intelligence system] doesn't understand the implications of what it's reading.

“It's doing a sort of pattern matching. It doesn't understand that if John sold his red Volvo to Mary that involves a transaction or possession and ownership being transferred. It doesn't understand that kind of information and so we are going to actually encode that, really try to teach it to understand the meaning of what these documents are saying,” Kurzweil told The Guardian.

For the pioneers of this brave new technology, such capabilities, where nothing is considered too personal or invasive, are an altogether positive thing.

Although the traditional image of robots wavers between an obedient domestic servant that never complains about working overtime and an automated factory machine that has revolutionized the workplace, the reality of the technology may be far more menacing than people realize. In any case, the issue demands serious discussion.

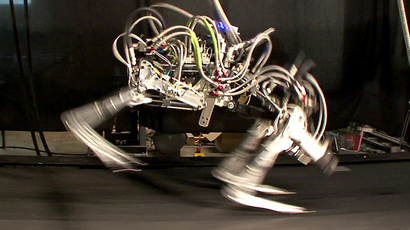

Consider that Google has already purchased a number of robotics firms, including Boston Dynamics, the company that develops menacingly lifelike military robots. It has also bought the British artificial intelligence startup DeepMind, a London-based firm that has “one of the biggest concentrations of researchers anywhere working on deep learning, a relatively new field of artificial intelligence research that aims to achieve tasks like recognizing faces in video or words in human speech,” according to MIT Technology Review.

MIT Technology Review even ventured a telling observation: “It may sound like a movie plot, but perhaps it’s even time to wonder what the first company in possession of a true AI (artificial intelligence) would do with the power that it provided.”

Google has also added to its ranks Geoffrey Hinton, a British-born computer scientist and one of the world's leading experts on neural networks, as well as Regina Dugan, who once headed the Defense Advanced Research Projects Agency (DARPA), the secretive US military research agency that has created a number of controversial programs, including the so-called Mind's Eye, a computer-vision system that is so powerful it can monitor the pulse rate of specific individuals in a crowd.

Peter Norvig, Google's research director, commented recently that the internet giant employs "less than 50 percent but certainly more than 5 percent" of the world's top experts on machine learning.

In 2009, Google helped to finance Singularity University, an institute co-founded by Kurzweil and dedicated to “exponential learning.”

But Google is not alone in the rush to acquire deep learning academics, of whom there are only about 50 worldwide, according to an estimate by Yoshua Bengio, an AI researcher at the University of Montreal. Last year, Facebook announced its own deep learning department and brought on board perhaps the world’s most respected deep learning academic, Yann LeCun of New York University, to oversee it.

Is there an ‘escape’ option?

On further reflection, given the diverse experts and technologies that Google and other IT companies are rapidly bringing into their fold, it does not require much imagination to envision a future state policed by super-intelligent robots capable of knowing the thinking processes of individuals and groups within society in advance, like a chess match in which one of the opponents is blindfolded.

To take the scenario one step further, would it be in the interest of the people, not to mention democracy, to allow fully armed ‘Robocops’ to patrol our streets?

Bill Joy, a co-founder of Sun Microsystems, is one of the few individuals in the field who have sounded the alarm over the advances being made in artificial intelligence, going so far as to warn that humans could become an “endangered species.”

“The 21st-century technologies - genetics, nanotechnology, and robotics (GNR) - are so powerful that they can spawn whole new classes of accidents and abuses,” Joy wrote in an article for Wired (“Why the Future Doesn’t Need Us,” April 2000). “Most dangerously, for the first time, these accidents and abuses are widely within the reach of individuals or small groups. They will not require large facilities or rare raw materials. Knowledge alone will enable the use of them.”

“I think it is no exaggeration to say we are on the cusp of the further perfection of extreme evil, an evil whose possibility spreads well beyond that which weapons of mass destruction bequeathed to the nation-states, on to a surprising and terrible empowerment of extreme individuals.”

Francis Fukuyama, an American political scientist, commenting on the rise of this brave new technology in Foreign Policy (Sept. 1, 2004), wrote: “Modifying any one of our key characteristics inevitably entails modifying a complex, interlinked package of traits, and we will never be able to anticipate the ultimate outcome.”

Perhaps that is the gravest danger associated with the race toward a “posthuman” condition: its sheer unpredictability, and the risk of abuse in the wrong hands.