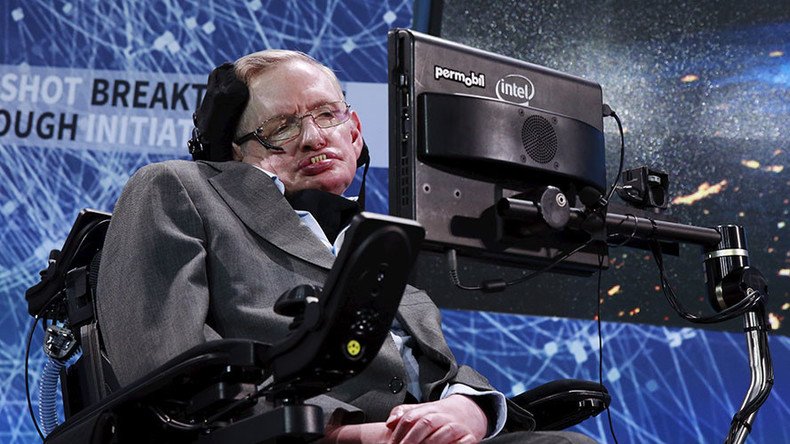

Stephen Hawking warns humans could be ‘superseded’ by supercomputers

Stephen Hawking has warned that humans face being superseded by computers if the race to develop artificial intelligence continues apace.

The renowned physicist and cosmologist issued the stark message at the Global Mobile Internet Conference in Beijing on Thursday.

Intelligence is the ability to adapt to change. An inspiring presentation at @theGMIC in Beijing. pic.twitter.com/rv73jMAjOw

— MUNDOmedia (@MUNDOMedia) April 28, 2017

Speaking via video link, Hawking said: “I believe that the rise of powerful AI will either be the best thing, or the worst thing, to happen to humanity. I have to say now that we do not yet know which. But we should do all we can to ensure that its future development benefits us and our environment.

“I see the development of AI as a trend with its own problems that we know must be dealt with now and into the future. The progress in AI research and development is swift and perhaps we should all stop for a moment and focus our research, not only on making AI more capable but on maximizing its societal benefit.”

Hawking believes there is no real difference between what can be achieved by a human brain and what can be achieved by a computer.

Speech of Artificial Intelligence by Stephen Hawking In GMIC Beijing 2017 @theGMIC https://t.co/8uZy6I6X7k

— Yicai Global 第一财经 (@yicaichina) April 28, 2017

“Computers can in theory emulate human intelligence and exceed it. So we cannot know if we will be infinitely helped by AI or ignored by it and sidelined or conceivably destroyed by it.

“Indeed we have concerns that clever machines will be capable of undertaking work currently done by humans and swiftly destroy millions of jobs.”

While acknowledging that AI has the potential to rid the world of problems such as disease, Hawking also painted a darker picture of a future in which machines have developed a will of their own. He said he feared that technological creations could one day become uncontrollable, resulting in humans being overthrown by the very systems that were built to serve them.

“I fear the consequences of creating something that can match or surpass humans. AI would take off on its own and redesign itself at an ever-increasing rate. Humans, who are limited by slow biological evolution, couldn’t compete and would be superseded.”

In January 2015, Hawking, along with other leading figures in science and innovation, signed an open letter calling for research into the potential pitfalls of AI. Signatories included SpaceX founder Elon Musk, Apple co-founder Steve Wozniak and a number of Google researchers.